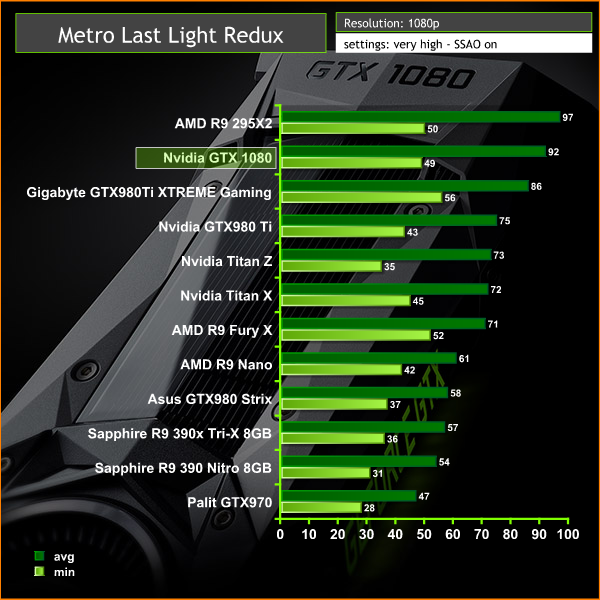

In Metro: Last Light, where we see nearly 0 deviation run-to-run, a 64FPS vs. 66FPS difference across all test passes may establish one device as technically superior.

As we stated in the first piece, we ask that any content creators leveraging this research in their own testing properly credit GamersNexus for its findings.

Today, we’re looking at standard deviation and run-to-run variance in tested games. Games on bench cycle regularly, so the purpose is less for game-specific standard deviation (something we’re now addressing at game launch) and more for an overall understanding of how games deviate run-to-run. This is why conducting multiple, shorter test passes (see: benchmark duration) is often preferable to conducting fewer, longer passes; after all, we are all bound by the laws of time.

Looking at statistical dispersion can help us understand whether a game itself is accurate enough for hardware benchmarks. If a game is inaccurate or varies wildly from one run to the next, we have to look at whether that variance is driver-, hardware-, or software-related. If it’s just the game, we must then ask the philosophical question of whether it’s the game we’re testing or the hardware. Sometimes, testing a game that has highly variable performance can still be valuable, primarily if it’s a game people want to play, like PUBG, despite its questionable performance. If the goal is a hardware benchmark and a game is behaving in outlier fashion, and is also largely unplayed, then it becomes suspect as a test platform.

A reminder before we start: this isn’t about vendor A vs. B. We use standard deviation to help build our error margins on bar charts, helping establish the difference between a margin that is statistically insignificant and immeasurably different, one that is not appreciably different but measurably different, and one that is appreciably and measurably different. The goal is to establish a confidence interval for each game, so that we may then establish whether differences in framerate are functionally identical or legitimately different.
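To make the three-way distinction above concrete, here is a minimal Python sketch of how run-to-run standard deviation might feed a comparison between two devices. It is an illustration under stated assumptions, not GN's actual tooling: the function names, the sample FPS figures, the rule that error bars span one sample standard deviation either side of the average, and the 5% "appreciable" threshold are all hypothetical.

```python
from statistics import mean, stdev


def summarize(passes):
    """Return (average FPS, sample standard deviation) for one device's test passes."""
    return mean(passes), stdev(passes)


def compare(passes_a, passes_b, appreciable_pct=5.0):
    """Classify the gap between two devices' average FPS.

    Assumptions (not from the article): error bars are +/- one sample
    standard deviation, and a gap under `appreciable_pct` percent is
    treated as not appreciable to a player.
    """
    mean_a, sd_a = summarize(passes_a)
    mean_b, sd_b = summarize(passes_b)
    gap = abs(mean_a - mean_b)

    # Error bars overlap when the gap is no larger than the combined margins.
    if gap <= sd_a + sd_b:
        return "functionally identical"

    # Express the gap as a percentage of the slower device's average.
    gap_pct = 100.0 * gap / min(mean_a, mean_b)
    if gap_pct < appreciable_pct:
        return "measurably but not appreciably different"
    return "appreciably and measurably different"


# Hypothetical data: a very consistent game vs. a highly variable one.
consistent_a = [64.0, 64.1, 63.9, 64.0]
consistent_b = [66.0, 66.1, 65.9, 66.0]
noisy_a = [60.0, 68.0, 62.0, 66.0]
noisy_b = [62.0, 70.0, 64.0, 68.0]

print(compare(consistent_a, consistent_b))
print(compare(noisy_a, noisy_b))
```

With the made-up numbers above, the same 2FPS gap in averages is classified differently depending on how much each game deviates between passes: the consistent game separates the two devices, while the noisy game cannot.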
Our first information dump focused on benchmark duration, addressing when it’s appropriate to use 30-second runs, 60-second runs, and more.

# Metro: Last Light benchmark series

As part of our new and ongoing “Bench Theory” series, we are publishing a year’s worth of internal-only data that we’ve used to drive our 2018 GPU test methodology. We haven’t yet implemented the 2018 test suite, but will be soon. The goal of this series is to help viewers and readers understand what goes into test design, and we aim to underscore the level of accuracy that GN demands for its publication.
